There was a time when the use of JavaScript on websites, and how it would affect SEO, was a highly debated topic in SEO circles. SEO experts were widely confused about whether search engines could parse JavaScript and understand website content.

But can search engines now parse JavaScript and rank websites that use JavaScript to deliver their content?

Before answering this question, we must understand how JavaScript affects the way content is delivered on your website and how JavaScript is implemented. We must also understand how search engines crawl and index web pages that use JS code.

What is JavaScript?

JavaScript is one of the fundamental and most popular programming languages used to create websites. JavaScript (JS) lets us control the behavior of each element in a web page, making the page more interactive and engaging for the user.

So it would be a bad idea to discard JavaScript completely just so that search engines can crawl and index a website. Considering the benefits and popularity of JavaScript, it is more appropriate for search engines to find a way to crawl and index websites that use it.

In the early days, JavaScript was used only on the client side, in browsers (for rendering the data received from the server). This was the root cause of the difficulty search engines had in parsing the content of some websites using JavaScript.

But now JavaScript is implemented on the server side as well (in web servers and databases). If the JavaScript is rendered on the server side, search engines have no problem parsing the content of websites using JavaScript. The whole problem arose when JavaScript was used only for client-side rendering.

Let’s see why it was difficult for search engines to parse JavaScript when it is used only for client-side rendering. For this, we need to know how search engines work and how they treat JavaScript.
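To make the difference between client-side and server-side rendering concrete, here is a minimal sketch. The product data, the `/app.js` script path, and both function names are assumptions made up for illustration; they do not come from any particular framework.

```javascript
// Hypothetical product data; on a real site this would come from a database or API.
const products = [
  { name: "Blue Kettle", price: 25 },
  { name: "Red Toaster", price: 40 },
];

// Client-side rendering: the server sends an empty shell, and a script
// (here the hypothetical /app.js) fills in #app later, inside the browser.
function clientSideShell() {
  return '<html><body><div id="app"></div><script src="/app.js"></script></body></html>';
}

// Server-side rendering: the same content is written into the HTML
// before the response leaves the server.
function serverSideRender(items) {
  const listItems = items
    .map((item) => `<li>${item.name}: $${item.price}</li>`)
    .join("");
  return `<html><body><div id="app"><ul>${listItems}</ul></div></body></html>`;
}

// Anything that reads the raw source without executing JavaScript
// finds the product name only in the server-rendered version.
console.log(clientSideShell().includes("Blue Kettle")); // false
console.log(serverSideRender(products).includes("Blue Kettle")); // true
```

With the empty shell, the content exists only after a browser (or a renderer) runs the script, which is why source-only parsing misses it.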

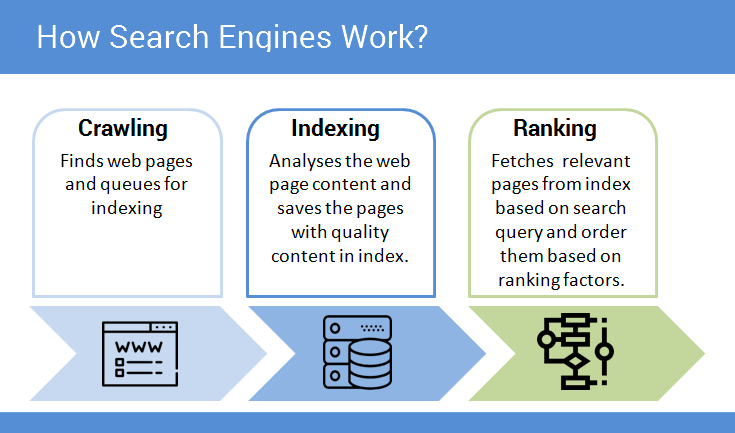

How Search Engines Work: Crawling, Indexing and Ranking

The basic functions a search engine performs to produce a meaningful search result are the following.

- Crawling

- Indexing

- Ranking

What is crawling in SEO?

Crawling is the process by which Google discovers a page on your website. Google uses web crawlers, often called spiders, to discover web pages and update its index on a regular basis.

Google allots every website a crawl budget, which essentially determines how often and how many pages spiders should crawl on that website. Google also finds new websites through external links pointing to them from the web pages it crawls.

The crawlers do not render the pages they crawl; instead, they analyze the source code of the pages using a parsing module. Crawlers are able to validate HTML code and hyperlinks.

When you search the Internet using Google, you are not actually searching the entire Internet. You are only searching Google’s index of the web.

Search engine spiders such as Googlebot (Google’s crawler) carry out the crawling process, whereas indexing is done by Google’s indexing system, named Caffeine.
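The sketch below illustrates what analyzing the source without rendering means in practice. The `extractLinks` function and the sample page are assumptions for illustration only; a regular expression is used to keep the example short, whereas a real crawler would use a proper HTML parser.

```javascript
// Collect href values from anchor tags found in raw HTML source.
// No script in the page is executed at any point.
function extractLinks(html) {
  const links = [];
  const anchorPattern = /<a\s[^>]*href="([^"]*)"/gi;
  let match;
  while ((match = anchorPattern.exec(html)) !== null) {
    links.push(match[1]);
  }
  return links;
}

const pageSource = `
<html><body>
  <a href="/about">About</a>
  <a class="nav" href="/contact">Contact</a>
  <div id="menu"></div>
  <script>
    // This link exists only after the script runs in a browser.
    var link = document.createElement("a");
    link.setAttribute("href", "/products");
    document.getElementById("menu").appendChild(link);
  </script>
</body></html>`;

console.log(extractLinks(pageSource)); // [ '/about', '/contact' ]
```

The `/products` link never appears in the result, because it is created by the script rather than written in the HTML source.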

What is indexing?

Indexing refers to the process of analyzing the content and relevance of each crawled web page and adding that page to Google’s index of web pages that are eligible to feature in search results. In simple terms, web pages that are not indexed by Google will not appear in search results.

Unlike crawling, the indexing process involves rendering the page using the web rendering service (WRS). Google’s Webmaster Tools (currently known as Search Console) shows you how Google renders your web page through the Fetch and Render function (since replaced by the URL Inspection tool).

The crawling and indexing processes often work hand in hand.

Crawlers find pages and send them to the indexer. After analyzing the pages sent by the crawler, the indexer feeds new URLs found on those pages back to the crawler, so that the crawler can prioritize those URLs based on their value.
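The feedback loop between crawler and indexer can be sketched as a simple queue. Everything here is an assumption for illustration: the `pages` map stands in for the web, the URLs are made up, and the prioritization step is left out so that URLs are simply crawled in the order they are discovered.

```javascript
// A tiny in-memory "web": each URL maps to the links found on that page.
const pages = {
  "/": ["/blog", "/about"],
  "/blog": ["/blog/post-1", "/"],
  "/about": [],
  "/blog/post-1": ["/about"],
};

function crawlAndIndex(startUrl) {
  const queue = [startUrl]; // URLs waiting to be crawled
  const seen = new Set([startUrl]); // URLs already queued at some point
  const index = []; // pages that have been handed to the indexer

  while (queue.length > 0) {
    const url = queue.shift(); // the crawler fetches the next URL
    const linksOnPage = pages[url] || [];
    index.push(url); // the indexer analyzes and stores the page

    // The indexer feeds newly discovered URLs back to the crawler.
    for (const link of linksOnPage) {
      if (!seen.has(link)) {
        seen.add(link);
        queue.push(link);
      }
    }
  }
  return index;
}

console.log(crawlAndIndex("/")); // [ '/', '/blog', '/about', '/blog/post-1' ]
```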

Only pages that are crawled and indexed are eligible to be ranked and appear in search results.

How Does Google Rank Web Pages?

The ranking process begins when a user makes a search. To serve the most useful and relevant search results, search engines must perform some calculations and retrieve the best possible results from their index of web pages.

The ranking process involves the following three critical steps.

- Analyzing the intent and context of the search query

- Identifying the web pages that are eligible to appear in the search results

- Ordering the eligible web pages based on their importance and relevance to the search query
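As a toy illustration of these three steps, the sketch below scores pages purely by how many query terms they contain. This is an assumption made for the sake of a short example; real ranking relies on many more signals, and the `indexedPages` data and `rank` function are hypothetical.

```javascript
const indexedPages = [
  { url: "/js-seo", text: "javascript seo guide for search engines" },
  { url: "/css-tips", text: "css layout tips" },
  { url: "/seo-basics", text: "seo basics crawling indexing ranking" },
];

function rank(query, pagesInIndex) {
  // Step 1: analyze the query (here, just split it into lowercase terms).
  const terms = query.toLowerCase().split(/\s+/).filter(Boolean);

  return pagesInIndex
    // Score each page by how many query terms appear in its text.
    .map((page) => {
      const words = new Set(page.text.split(" "));
      const score = terms.filter((term) => words.has(term)).length;
      return { url: page.url, score };
    })
    // Step 2: keep only eligible pages (at least one matching term).
    .filter((result) => result.score > 0)
    // Step 3: order the eligible pages, best match first.
    .sort((a, b) => b.score - a.score)
    .map((result) => result.url);
}

console.log(rank("JavaScript SEO", indexedPages)); // [ '/js-seo', '/seo-basics' ]
```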

How do crawlers and indexers handle JavaScript?

The whole debate on the ability of search engines to index JavaScript pages started when SEO experts began confusing Googlebot (the crawler) with Caffeine (the indexing system).

The answer to this question is very simple. Yes, Google can render JavaScript and index web pages that use JavaScript like any other web page.

But the crawlers do not render JavaScript, or any page for that matter. The process of producing search results is very complex, with multiple algorithms working together to deliver the best result for a query. Since the page is rendered by search engines during indexing, we can safely say that JavaScript is readable by Google.

How does JavaScript really affect SEO efforts?

Using JavaScript has its merits: the loading speed of pages can be improved significantly, and the interface can be made more interactive and engaging. But let’s take a look at the issues created by web page content that uses JavaScript.

We have already made it clear that JavaScript is handled during the indexing of web pages.

But does it really matter that JavaScript is ignored during crawling?

Does JavaScript affect SEO in any way during crawling?

Is it important to know that crawlers do not render JavaScript?

Yes, it is important to know that crawlers do not render JavaScript, and to know the difference between crawling and indexing in SEO. Not knowing the difference can lead to errors.

The key thing to understand here is that JavaScript websites are indexed and ranked, but it is harder for search engines to understand JavaScript-generated content. You need to make your implementation of JavaScript SEO friendly, so that search engines can easily understand the content delivered using JavaScript.

Websites using JavaScript often suffer because JavaScript is implemented incorrectly, not because Google is unable to deal with JavaScript.

How to implement JavaScript in an SEO friendly way?

In 2009, Google publicly stated (in an official article) that AJAX applications are difficult for search engines to process, as AJAX content is dynamically created by the browser and hence not visible to crawlers.

Later, in 2015, Google addressed this issue and made it clear that it can render and index JavaScript-based web pages unless you block Googlebot from crawling JavaScript or CSS files. (Refer to the official statement from Google.)
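One common way JavaScript or CSS files end up blocked is through `Disallow` rules in robots.txt. The sketch below is a rough text check, not a full robots.txt parser: it ignores user-agent groups and only flags rules that mention `.js` or `.css`. The function name and the sample file are assumptions for illustration.

```javascript
// Return the Disallow rules that look like they block script or style files.
function findBlockedAssetRules(robotsTxt) {
  return robotsTxt
    .split("\n")
    .map((line) => line.trim())
    .filter((line) => /^disallow:/i.test(line))
    .filter((line) => /\.(js|css)\b/i.test(line));
}

const robotsTxt = `
User-agent: *
Disallow: /admin/
Disallow: /*.js$
Disallow: /*.css$
`;

console.log(findBlockedAssetRules(robotsTxt));
// [ 'Disallow: /*.js$', 'Disallow: /*.css$' ]
```

Rules flagged this way are worth reviewing by hand, since blocking these files can stop Google from rendering the page as users see it.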

Using JavaScript for building website structure

It is recommended to always use HTML for creating a website’s basic structure. You can use JavaScript or AJAX to deliver content or make the appearance more appealing after laying out the basic structure in HTML. Doing this ensures that Googlebot can access the HTML and users can benefit from the JavaScript.

We will look into some of the common errors that SEOs make while dealing with JavaScript. This will give you a good understanding of the things you must avoid while implementing JavaScript.

Serving a pre-rendered page from the server

Usually, websites using JavaScript are rendered on the client side (normally in a web browser). Pre-rendering means rendering all the elements of a web page on the server before the data is transmitted to the browser. When you implement pre-rendering, you should follow Google’s quality guidelines and show the same content to all users.

Some websites using JavaScript tend to pre-render only for search engine crawlers, which is against the quality guidelines. Essentially, the server checks the user-agent that sent the request for a page and, if it is a search engine crawler like Googlebot, provides a pre-rendered snapshot of the page.

This practice is not recommended for web pages using JavaScript. You should ideally deliver the same content to all user-agents accessing your web page. Serving different content to different user agents amounts to cloaking, a bad practice that can attract severe penalties.
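The distinction drawn above can be sketched with two request handlers. All function names and the page content are hypothetical; the point is only that the recommended handler never uses the user-agent to decide what content to send.

```javascript
// Hypothetical pre-render function: builds the full HTML on the server.
function prerenderPage(title) {
  return `<html><body><h1>${title}</h1><p>Full page content</p></body></html>`;
}

// Recommended: every visitor gets the same pre-rendered HTML.
// The user-agent is accepted but deliberately not used to pick the content.
function handleRequest(userAgent) {
  return prerenderPage("JavaScript and SEO");
}

// Discouraged (cloaking): crawlers get content that regular users never see.
function handleRequestCloaked(userAgent) {
  if (/googlebot/i.test(userAgent)) {
    return prerenderPage("JavaScript and SEO");
  }
  return '<html><body><div id="app"></div></body></html>';
}

const bot = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)";
const browser = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)";

console.log(handleRequest(bot) === handleRequest(browser)); // true
console.log(handleRequestCloaked(bot) === handleRequestCloaked(browser)); // false
```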

Tools for fixing SEO issues with JavaScript

There are tools that help you deliver website content through JavaScript without errors. You can make sure that the JavaScript used on your website is easily crawled by search engines like Google if you use any of the following tools.

- Pre-render

- Angular JS SEO

- Backbone JS SEO

- SEO.JS

- BromBone

Conclusion

You can always check whether your website can be crawled and indexed by Google using the Fetch and Render tool available in Google Search Console. This is a highly recommended practice if your website uses JavaScript for delivering content. Using JavaScript to deliver content does not limit your SEO opportunities; however, you should make sure that the JavaScript is implemented in an SEO-friendly manner and does not violate Google’s guidelines and best practices.

Contributed by https://www.globalmediainsight.com/